Pinbot

A self-playing pinball machine.

As Project Lead, I led a team of 6 to teach a pinball machine to play itself using reinforcement learning.

In Fall of 2024, I formed a team to achieve a long-term dream: build a real, self-playing pinball machine. Through CMU’s 16-831, Intro to Robot Learning, we developed a working demo in simulation, designed and wired in a custom controller PCB, built out a working vision stack, shaped the reinforcement learning algorithm, and more.

👀 Vision Stack

The vision stack gives our machine “eyes.” Three cameras are currently rigged around the machine, and OpenCV handles all of the image processing.

Playfield Camera

A camera mounted on a mic stand captures a bird’s-eye view of the playfield. ArUco tags are used to crop the image and correct the skewed perspective: we identify parallel lines on the rectangular playfield and apply a perspective warp to produce a corrected view.
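In practice OpenCV handles this in two calls: `cv2.getPerspectiveTransform` computes a 3x3 homography from four point pairs, and `cv2.warpPerspective` resamples the image with it. The dependency-free sketch below shows the math underneath. It assumes the four playfield corners have already been located in the camera image (for example, from the detected ArUco tags). The corner pixels and the 600 x 1200 output size are made-up values.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve a square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corners (e.g. three points on one line)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def perspective_transform(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """Homography H (with h33 = 1) mapping four src points onto four dst points.

    Each pair gives u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1) and the
    analogous equation for v; multiplying out yields two linear rows per pair.
    """
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(h: Matrix, p: Point) -> Point:
    """Map a single image point through H, dividing by the projective coordinate w."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (
        (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
        (h[1][0] * x + h[1][1] * y + h[1][2]) / w,
    )


# Hypothetical corner pixels (TL, TR, BR, BL) of a skewed playfield, mapped to a 600 x 1200 view.
corners = [(112.0, 40.0), (530.0, 52.0), (585.0, 700.0), (60.0, 690.0)]
target = [(0.0, 0.0), (600.0, 0.0), (600.0, 1200.0), (0.0, 1200.0)]
H = perspective_transform(corners, target)
print(apply_homography(H, corners[2]))  # approximately (600.0, 1200.0)
```

To warp the full frame, `cv2.warpPerspective` maps each output pixel back through the inverse of the homography and samples the camera image at that location.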

Scoreboard Camera

A tripod-mounted camera points at the four sets of seven-segment score displays on the backbox. The camera settings (exposure, gain, brightness, contrast, and saturation) are tuned to filter out all other light, producing a nearly binary image of the scores. PyTesseract reads the scores, with additional preprocessing such as thresholding and binary dilation to improve recognition accuracy. Because the score blinks during gameplay, we cache the last 10 readings and use their mode as the current score.
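The blink-filtering step can be sketched with a fixed-length `deque` and `collections.Counter`. This is an illustrative sketch, not the team’s code. The Tesseract call itself is omitted, and representing a blank or unreadable frame as `None` is an assumption.

```python
from collections import Counter, deque
from typing import Deque, Optional


class ScoreFilter:
    """Debounce blinking score OCR by reporting the mode of the last `window` readings."""

    def __init__(self, window: int = 10) -> None:
        self.readings: Deque[int] = deque(maxlen=window)  # oldest readings fall off automatically

    def update(self, reading: Optional[int]) -> Optional[int]:
        # Assumption: a frame captured while the display is blanked (or unreadable) arrives
        # as None and is skipped rather than cached.
        if reading is not None:
            self.readings.append(reading)
        if not self.readings:
            return None
        return Counter(self.readings).most_common(1)[0][0]


# Hypothetical OCR stream: blanks while blinking plus one misread (1800).
scores = ScoreFilter()
for ocr_value in [1200, None, 1200, 1800, None, 1200]:
    current = scores.update(ocr_value)
print(current)  # 1200
```

Unlike the mean, the mode only ever reports a value that was actually read. A single misread therefore cannot drag the reported score to a nonsensical number.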

Start Button Camera

A third camera, attached directly to the machine, views the start button. A flashing start button indicates that the game is over, which prompts the agent to begin the next game.
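One simple way to detect the flashing is to threshold the mean brightness of a region around the button on each frame, then count on/off transitions over a sliding window. The write-up does not say how the team’s detector works. The region, threshold, window length, and toggle count below are all hypothetical.

```python
from collections import deque
from typing import Deque


class FlashDetector:
    """Decide whether a light is flashing from per-frame brightness samples.

    All parameters are hypothetical and would need tuning on the real camera:
    `threshold` separates lit from unlit, and the light counts as flashing once it has
    toggled at least `min_toggles` times within the last `window` frames.
    """

    def __init__(self, threshold: float = 128.0, window: int = 40, min_toggles: int = 4) -> None:
        self.threshold = threshold
        self.min_toggles = min_toggles
        self.lit: Deque[bool] = deque(maxlen=window)

    def update(self, brightness: float) -> bool:
        """Feed the mean brightness of the button region; return True while it is flashing."""
        self.lit.append(brightness > self.threshold)
        states = list(self.lit)
        toggles = sum(1 for prev, curr in zip(states, states[1:]) if prev != curr)
        return toggles >= self.min_toggles


steady = FlashDetector()
print(any(steady.update(200.0) for _ in range(60)))  # False: lit, but never toggles

blinking = FlashDetector()
flags = [blinking.update(200.0 if (i // 5) % 2 == 0 else 20.0) for i in range(40)]
print(flags[-1])  # True
```

Counting transitions, rather than just checking whether the light is on, separates a steadily lit button from a flashing one.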

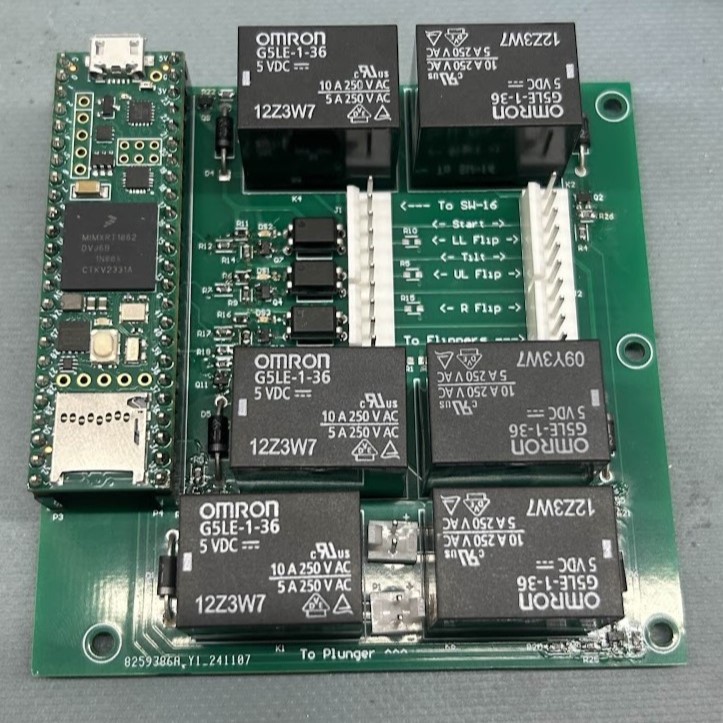

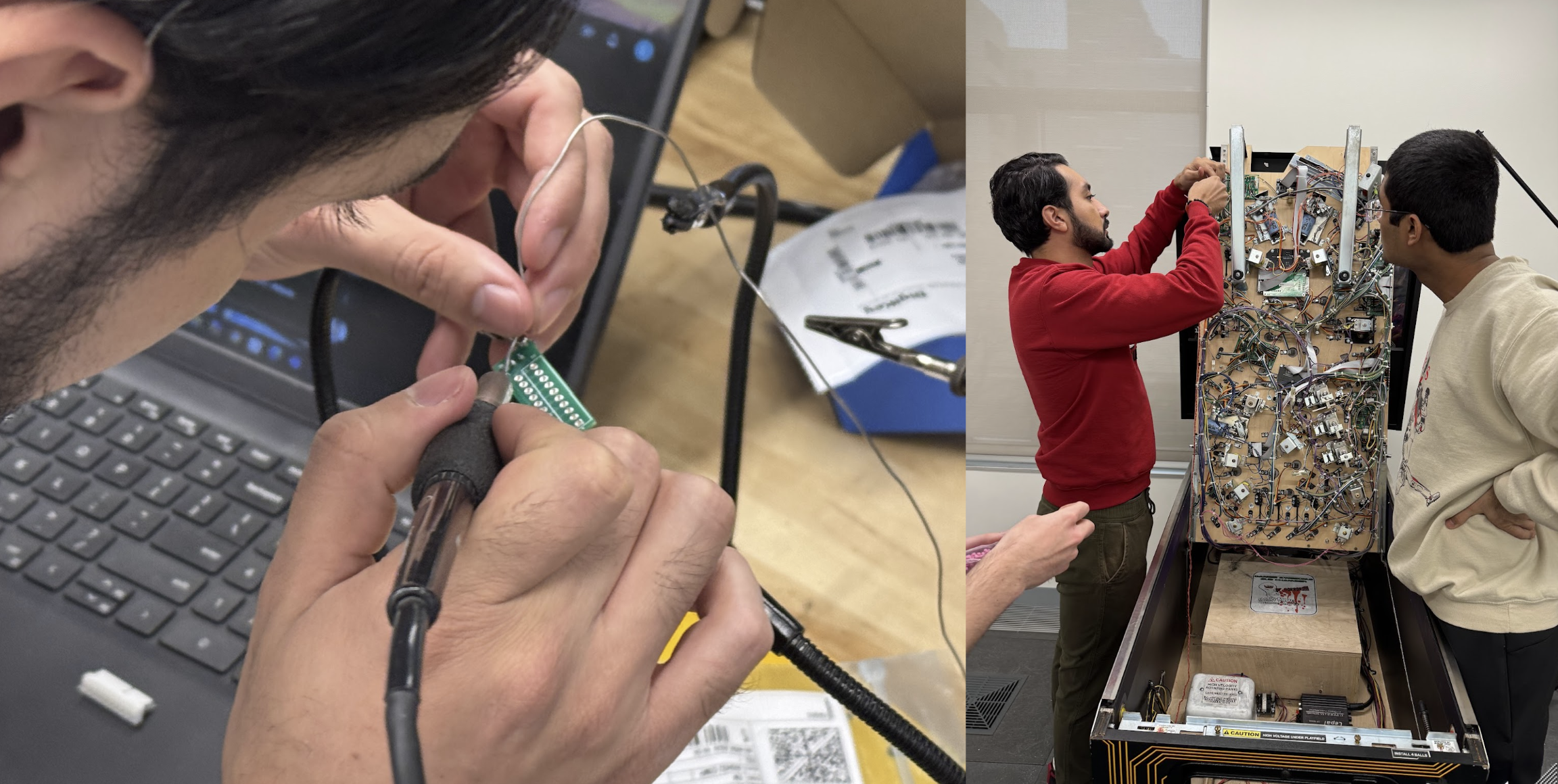

⚡ Custom Electronics

To access the flipper, start button, and launcher signals, we used a Teensy microcontroller and a custom PCB, connected to the computer via a serial interface. This setup allows these components to be actuated remotely, without touching the machine’s physical controls. With the assistance of Spooky Pinball, we identified the correct control board, ‘SW-16,’ for handling input signals. Referencing the machine’s wiring diagrams, we then installed our custom PCB into the system.

To enable external control, we developed an interface consisting of two interlinked programs: an Arduino program for direct hardware interaction, and a Python program for the UI and high-level logic. The two communicate over a serial connection, which lets Python send commands to the Arduino side and receive status updates from it. With this interface, the flippers, plunger, and start button can be actuated remotely from a computer keyboard.
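Below is a minimal sketch of the Python side of such a link. It assumes a made-up text protocol: one letter per actuator, followed by `1` (press) or `0` (release) and a newline. The command letters, key bindings, and `Controller` class are all hypothetical, since the write-up does not describe the real firmware protocol. In practice, `link` would be a pyserial `serial.Serial` object opened on the Teensy’s port, which provides the `write(bytes)` method used here.

```python
from typing import Dict, Protocol


class ByteWriter(Protocol):
    """Anything with a write(bytes) method, e.g. a pyserial serial.Serial port."""

    def write(self, data: bytes) -> int: ...


# Hypothetical one-letter command codes understood by the microcontroller firmware.
ACTUATORS: Dict[str, str] = {"left": "L", "right": "R", "plunger": "P", "start": "S"}

# Hypothetical keyboard bindings for manual play from the computer.
KEYMAP: Dict[str, str] = {"z": "left", "/": "right", "space": "plunger", "enter": "start"}


def encode(actuator: str, pressed: bool) -> bytes:
    """Encode one command, e.g. ("left", True) -> b"L1\\n" and ("left", False) -> b"L0\\n"."""
    return f"{ACTUATORS[actuator]}{int(pressed)}\n".encode("ascii")


class Controller:
    """Python-side wrapper that turns key events into serial commands."""

    def __init__(self, link: ByteWriter) -> None:
        self.link = link

    def actuate(self, actuator: str, pressed: bool) -> None:
        self.link.write(encode(actuator, pressed))

    def on_key(self, key: str, pressed: bool) -> None:
        # Keys without a binding are ignored.
        if key in KEYMAP:
            self.actuate(KEYMAP[key], pressed)
```

Sending separate press and release messages lets the Python side hold a flipper up for as long as its key is held down.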

🕹️ Simulator

To accelerate training, we chose to deploy in simulation first. Since there are hundreds of different pinball games, each with its own layout, we needed a simulation of our specific machine. Fortunately, VPinWorkshop was kind enough to develop exactly that, using the Visual Pinball Engine.

To pass data to our AI agent, we modified the game code to write the current score and ball number to disk. This stands in for the OCR methods that would be used on the physical machine. We also modified the game to export the ball’s position and velocity in the same way.
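The agent-side reader for this handoff might look like the sketch below. The file layout (one `key=value` pair per line) and the field names are assumptions for illustration. The write-up only says that the modified game writes the score, ball number, position, and velocity to disk. Returning `None` on a missing or half-written file lets the caller reuse the previous step’s state instead of crashing when it reads while the simulator is writing.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


@dataclass
class SimState:
    score: int
    ball: int
    x: float
    y: float
    vx: float
    vy: float


def read_state(path: Path) -> Optional[SimState]:
    """Parse a hypothetical `key=value` export file written by the simulator.

    Returns None if the file is missing or incomplete (e.g. caught mid-write).
    """
    try:
        text = path.read_text()
        fields = {
            key.strip(): value.strip()
            for key, value in (line.split("=", 1) for line in text.splitlines() if "=" in line)
        }
        return SimState(
            score=int(fields["score"]),
            ball=int(fields["ball"]),
            x=float(fields["x"]),
            y=float(fields["y"]),
            vx=float(fields["vx"]),
            vy=float(fields["vy"]),
        )
    except (OSError, KeyError, ValueError):
        return None
```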

🧠 Building The AI

To train the AI, we used Unity’s ML-Agents package with the Proximal Policy Optimization (PPO) algorithm. Our initial codebase was built on Microsoft’s 3DPinballAi repo; a huge thank you to Elliot Wood for creating it!
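ML-Agents implements PPO internally, so none of this had to be written by hand. The sketch below only illustrates the clipped surrogate objective that PPO maximizes over a batch of sampled actions. `epsilon` is the clip range, which ML-Agents exposes as the `epsilon` hyperparameter in its trainer configuration (default 0.2). The write-up does not state the value the team used.

```python
import math
from typing import Sequence


def ppo_clip_objective(
    new_logp: Sequence[float],
    old_logp: Sequence[float],
    advantages: Sequence[float],
    epsilon: float = 0.2,
) -> float:
    """Mean PPO clipped surrogate objective over a batch (higher is better).

    ratio_t = pi_new(a_t | s_t) / pi_old(a_t | s_t) = exp(new_logp_t - old_logp_t)
    L = mean_t min(ratio_t * A_t, clip(ratio_t, 1 - epsilon, 1 + epsilon) * A_t)
    """
    if not (len(new_logp) == len(old_logp) == len(advantages) > 0):
        raise ValueError("inputs must be non-empty and the same length")
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # Taking the minimum makes the objective pessimistic: a large policy change can
        # never increase it beyond what the clipped ratio allows.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

The clip keeps each update close to the policy that collected the data, which is the main source of PPO’s stability.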

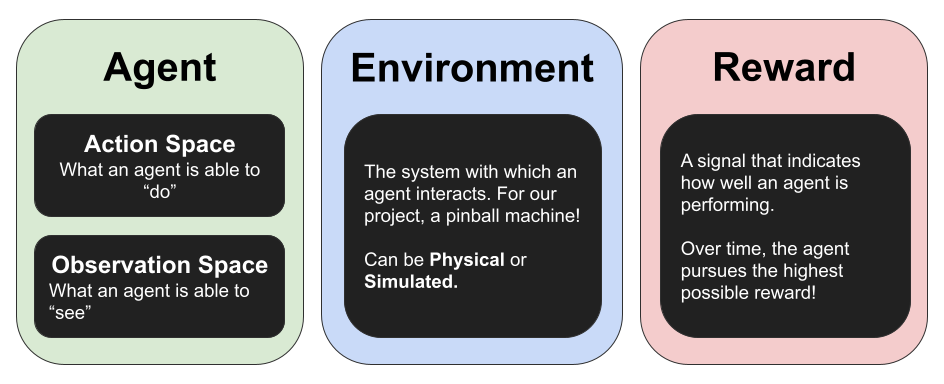

We broke down the RL problem into three chunks: shaping the Agent, Environment, and Reward.

Agent

Our agent’s action space is relatively simple. Twenty times a second, it chooses one of four discrete actions:

- Idle

- Press Left Flipper

- Press Right Flipper

- Press Both Flippers
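The mapping from these four discrete actions to flipper states can be sketched as follows. The action indices and the `flipper_states` helper are hypothetical. ML-Agents delivers the chosen discrete action as an integer index, but the team’s ordering is not given. The 20 Hz rate comes from the text and gives a 50 ms decision step.

```python
from enum import IntEnum
from typing import Tuple

DECISION_HZ = 20                  # the agent picks a new action 20 times a second
STEP_SECONDS = 1.0 / DECISION_HZ  # so each action is held for 50 ms


class Action(IntEnum):
    # Hypothetical index assignment for the four discrete actions.
    IDLE = 0
    LEFT = 1
    RIGHT = 2
    BOTH = 3


def flipper_states(action: int) -> Tuple[bool, bool]:
    """Return (left_pressed, right_pressed) to hold for one decision step."""
    a = Action(action)  # raises ValueError for an index outside 0-3
    left = a in (Action.LEFT, Action.BOTH)
    right = a in (Action.RIGHT, Action.BOTH)
    return left, right
```

Encoding “both” as its own action, rather than using two independent yes/no branches, keeps the action space a single four-way choice.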

The observation space is similarly straightforward, containing the following elements:

- Playfield Image, grayscaled

- Ball X Position, normalized

- Ball Y Position, normalized

- Ball X Velocity, normalized

- Ball Y Velocity, normalized
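Here is a sketch of the normalization step. It assumes positions are scaled to [0, 1] by the playfield size and velocities to [-1, 1] by a speed cap. The field dimensions and `MAX_SPEED` are placeholder values, not the project’s. The grayscale conversion uses the ITU-R BT.601 luma weights, which are also the weights OpenCV uses for its RGB-to-gray conversion.

```python
from typing import List, Sequence

# Placeholder bounds: playfield size in simulator units and a speed cap for scaling.
FIELD_WIDTH = 1000.0
FIELD_HEIGHT = 2000.0
MAX_SPEED = 5.0


def clamp(v: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, v))


def ball_features(x: float, y: float, vx: float, vy: float) -> List[float]:
    """Positions scaled to [0, 1]; velocities scaled to [-1, 1] and clipped."""
    return [
        clamp(x / FIELD_WIDTH, 0.0, 1.0),
        clamp(y / FIELD_HEIGHT, 0.0, 1.0),
        clamp(vx / MAX_SPEED, -1.0, 1.0),
        clamp(vy / MAX_SPEED, -1.0, 1.0),
    ]


def grayscale(rgb_rows: Sequence[Sequence[Sequence[int]]]) -> List[List[float]]:
    """Convert 8-bit RGB pixels to luma in [0, 1] using BT.601 weights."""
    return [[(0.299 * r + 0.587 * g + 0.114 * b) / 255.0 for r, g, b in row] for row in rgb_rows]
```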

Currently, we get the ball’s position and velocity directly from the simulation. For the physical machine, we may develop a CV model that searches for ball-like features and extracts that information.
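As a deliberately simple stand-in for that future CV model (not the team’s design), the ball could be located as the centroid of the brightest pixels in the warped playfield image. Its velocity could then be estimated by finite differences between consecutive 20 Hz frames. The brightness threshold is hypothetical. On a real machine, lit inserts and reflections would also pass a plain brightness threshold, so a practical tracker would need something more robust.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def ball_centroid(gray: Sequence[Sequence[float]], threshold: float = 0.9) -> Optional[Point]:
    """Centroid (x, y) of pixels brighter than `threshold`, or None if none are found."""
    xs: List[int] = []
    ys: List[int] = []
    for y, row in enumerate(gray):
        for x, value in enumerate(row):
            if value > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def velocity(prev: Optional[Point], curr: Optional[Point], dt: float = 1 / 20) -> Optional[Point]:
    """Finite-difference velocity in pixels per second between two consecutive detections."""
    if prev is None or curr is None:
        return None
    return ((curr[0] - prev[0]) / dt, (curr[1] - prev[1]) / dt)
```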

Environment

The environment can be either the physical game or the simulator; for details on the latter, see the Simulator section above.

Reward

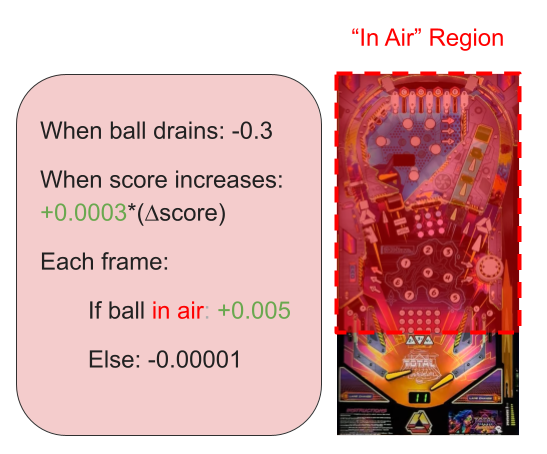

Although pinball already has a built-in reward system (the game score), additional reward shaping was needed for the agent to learn properly.

First, for numerical stability, the reward signal should stay between -1 (worst possible outcome) and 1 (ideal outcome). To achieve this, the current score is divided by the expected maximum score the agent can achieve.

Second, to disincentivize draining, a large penalty (-0.3) is applied whenever a ball is lost.

Third, to encourage keeping the ball “alive,” a small bonus is awarded every frame that the ball is above the flipper region threshold.

Finally, to prevent stalling, a small penalty is applied for every frame that the ball is below that region, i.e. at the flippers.
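Putting the four terms together, a per-frame reward might look like the sketch below. Only the -0.3 drain penalty comes from the write-up. The expected maximum score, the per-frame bonus and penalty, and the flipper-line position are placeholders. The sketch also assumes one reading of the score term: each step rewards the score gained during that step, divided by the expected maximum, so the score terms over a game sum to final score / expected maximum. The result is clipped to [-1, 1].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardConfig:
    expected_max_score: float = 1_000_000.0  # placeholder normalizer
    drain_penalty: float = -0.3              # value from the write-up
    alive_bonus: float = 0.001               # placeholder per-frame bonus
    stall_penalty: float = -0.001            # placeholder per-frame penalty
    flipper_line_y: float = 0.85             # placeholder; normalized y grows toward the flippers


def step_reward(
    score_delta: float, ball_y: float, drained: bool, cfg: RewardConfig = RewardConfig()
) -> float:
    """Shaped reward for one frame, clipped to [-1, 1]."""
    reward = score_delta / cfg.expected_max_score  # normalized score gained this frame
    if drained:
        reward += cfg.drain_penalty                # large penalty for losing the ball
    elif ball_y < cfg.flipper_line_y:
        reward += cfg.alive_bonus                  # ball is above the flipper region
    else:
        reward += cfg.stall_penalty                # ball is down at the flippers
    return max(-1.0, min(1.0, reward))
```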

🏆 Results

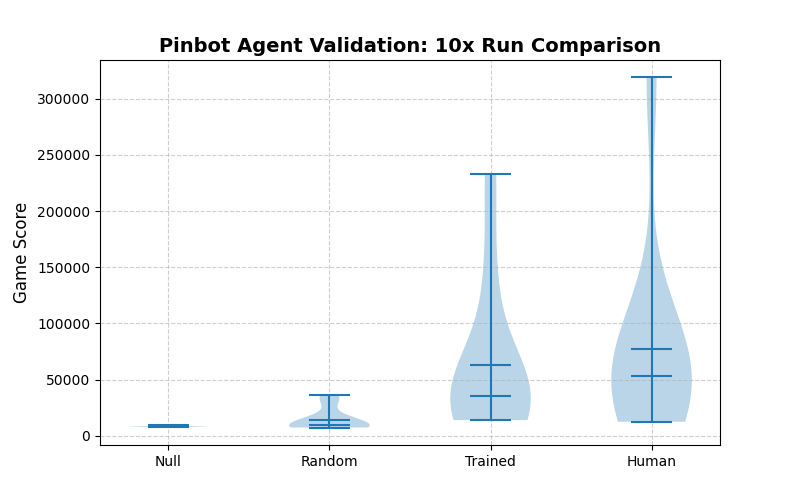

After training for 90k steps (about 6.5 hours), the model was evaluated against three baselines: a human player (myself), a randomized agent, and no agent. We ran 10 trials for each scenario; the aggregated results are shown above.

As the results show, the AI is clearly learning, performing substantially better than the initial randomized agent. It is beginning to approach human-level play, although more tuning is needed to get there.

⏭️ Next Steps

We are currently tuning the AI model further. Once we are happy with its performance, we will use transfer learning to bring the model onto the physical machine. This integration will require additional work, namely ball-loss detection and visual ball-position tracking.

We aim to benchmark the system against pro-level human players, potentially at Pinburgh 2025. Finally, we aim to publish our work!

Acknowledgements

So many folks helped bring the project to this point. Special thanks to:

- Sahil T. Chaudhary & Richa Mohta: Software

- Albert Xiao: Computer Vision

- Jarrod Homer & Luis F. Cuenca: Electronics

- Professor Guanya Shi, Jess Butterbaugh, Kaitlyn Buss: CMU Faculty support

- Spooky AJ, Spooky Tory, Gerry Stellenberg: Technical Support

- Elliot Wood: Technical guidance and code foundation

- Doug Polka and PGHPinball for lending the machine!